Did School Improvement Grants Work Anywhere?

Most people have seen the headlines. A rigorous study released in 2017 found no significant effect of the $7 billion federal School Improvement Grant (SIG) program on student outcomes.

But that is only part of the picture. The story of SIG is far more complex. We should know. My AIR colleagues and I spent years studying SIG schools.

It’s true: we learned that, at the national level, the SIG program did not improve student outcomes. But other studies found that SIG did work in some states, districts, or schools.

Why did SIG succeed in some places but not others? That is the $7 billion question.

First, some background.

In 2009, frustrated by the persistent level of low performance in the same schools—usually schools with high percentages of disadvantaged students—the U.S. Department of Education started the School Improvement Grant program.

Persistently low-performing schools received sizeable grants (up to $6 million over three years) to drive dramatic school change. They had to choose one of four school improvement models, each of which required a series of interventions intended to collectively catalyze school improvement. These interventions ranged from extended learning time and data-driven instruction to more out-of-school support and parent-engagement activities. In addition, under two of the most popular models, most SIG schools were required to replace their principals, and many were required to replace at least half their teaching staffs.

Sifting through the SIG research makes one point clear: SIG was implemented through states and districts, both of which could shape, for better or worse, the way SIG played out at the school level. One could argue that the success (or failure) of SIG was linked as much to state or district approaches to supporting schools as to federal policy.

At the state level, a rigorous study of SIG in Massachusetts demonstrated robust, statistically significant impacts on student achievement at multiple grade levels, in both reading and math. And in Massachusetts, SIG funding proved to have a statistically significant effect on closing the achievement gap compared to non-SIG schools. Another study, of SIG in California, suggested that schools that implemented the Turnaround Model, which called for replacing half the teachers, showed student achievement gains. In contrast, a study of SIG in Michigan detected no effect of SIG on student outcomes.

At the district level, the complexity of the SIG story becomes clearer. With colleagues at AIR, I studied a set of schools in the first cohort of SIG and followed them over three years. Unfortunately, several of these schools did not receive any support from their districts. Some districts did not appear to be acting in the best interest of these struggling schools: they abruptly replaced highly regarded principals or forced schools to accept transfer teachers who had not succeeded in other district schools.

As one school administrator of a SIG school explained, “Because so many changes are going on at the district office, you try to explain the SIG grant, and it still doesn’t stick because there are so many other things going on right next to them. And we’re so far [away]; it’s not on their radar. ... I think the district is the main challenge. ... I think we might be doomed to repeat history if no one in the district office knows what is going on here.”

But districts could be part of the solution. In 10 of the 25 SIG schools we studied, respondents (teachers and administrators) described their district as supportive, responsive, and accessible. And a recently released study of SIG found pronounced positive effects of SIG on student achievement in the San Francisco Unified School District.

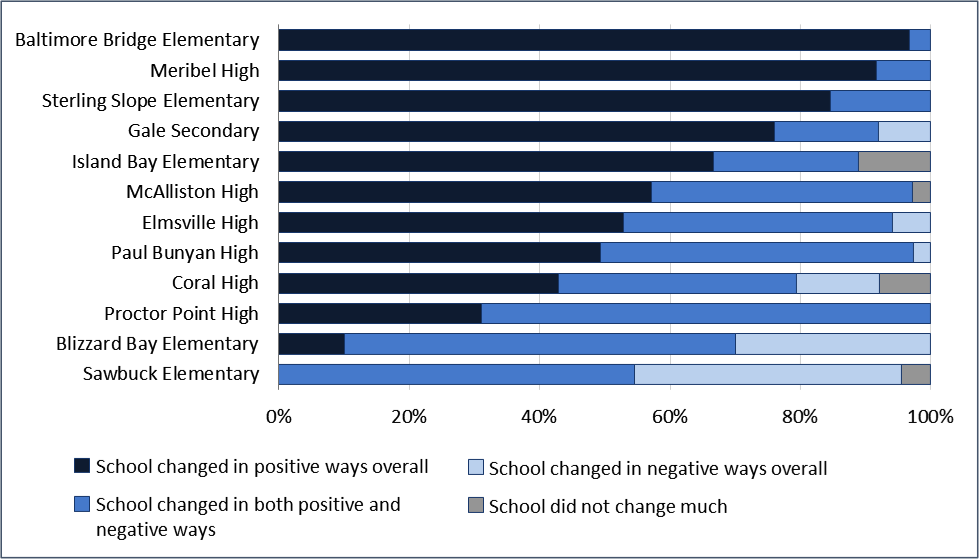

Just as there was variation in SIG implementation across states and districts, there was variation across schools: In some of the schools in our study, teachers credited SIG with stimulating “a U-turn” in performance, and a strong majority of teacher survey respondents thought their schools changed in positive ways over the three years of SIG. Elsewhere, teachers reported that their schools changed in negative ways or not at all.

Although the chart below reflects only a small sample of SIG schools, it helps explain why a larger national sample might show no overall impact on student outcomes – while hiding another story just below the surface. Look at some of our case study schools from across the country. When asked if their schools changed in positive ways, negative ways, or not at all during the three years of SIG, teachers’ responses ranged from nearly all reporting positive change to none reporting positive change. The average response might not be very encouraging, but to the teachers at the schools at the top of the chart, SIG extended a lifeline.

SIG 2010-13 – Perceived Improvement

Source: Study of School Turnaround teacher survey, spring 2013

Notes: Includes 12 case study schools in six states. All school names are pseudonyms.

While there is no evidence of across-the-board student gains, it would be a mistake to regard the School Improvement Grant policy as an unambiguous failure. Rather, administrators, policymakers, and advocates would do well to ask themselves three questions:

- Why did SIG succeed in some states, districts, and schools?

- What state and district policies are needed to facilitate rather than stymie school improvement efforts, and where were the missteps?

- And how can we better support students in these struggling schools?

*Study of School Turnaround: www.air.org/project/study-school-turnaround